As the speed gap between ultra-fast CPU cores and relatively slow memory widens, the memory system can become a major performance bottleneck. Low power consumption is another important design consideration, especially as battery-powered devices proliferate: lower power means longer battery life and longer usage time between charges. In typical applications, memory accounts for a significant share of the application processor's power consumption, and as memory designs grow more complex, with larger capacities and more hierarchy levels, memory power tends to rise rapidly. Reducing memory power is therefore an effective way to extend battery life. To better understand the inherent behavior of various applications, we need to characterize how they use memory and build a memory model that can tell whether an application performs frequent memory accesses, and can even help predict its performance.

This article provides a simple, economical way to dynamically characterize the computation and memory composition of an application with acceptable accuracy.

Methods for characterizing memory behavior

If an application performs no memory operations, its CPU utilization should be inversely proportional to the CPU core frequency, so the application cost (defined as the product of CPU utilization and CPU frequency) should remain constant. Once memory accesses are taken into account, this relationship no longer holds. At higher frequencies, memory has a larger impact on performance because the CPU spends more of its cycles waiting for memory responses (we assume here that the memory frequency does not change with the CPU frequency). On this basis, applications can be divided into two types: compute-bound and memory-bound.
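To make the cost argument concrete, here is a minimal sketch under a deliberately simple, hypothetical model: in each observation window of length T the task needs C million CPU cycles of computation plus S seconds stalled on memory, and S does not shrink when the CPU clock rises. Under these assumptions the cost is C/T + (S/T)·f: the first term is constant, while the second grows linearly with the frequency f. The function name `app_cost` and all numbers are illustrative and do not come from Marvell's measurements.

```python
def app_cost(freq_mhz: float, compute_mcycles: float, stall_s: float,
             window_s: float = 1.0) -> float:
    """Application cost (CPU utilization x CPU frequency in MHz) under a toy model.

    Hypothetical model: the task needs `compute_mcycles` million CPU cycles per
    window plus `stall_s` seconds stalled on memory; the stall time is assumed
    NOT to shrink when the CPU clock rises (memory clock is fixed).
    """
    compute_s = compute_mcycles / freq_mhz          # Mcycles / MHz = seconds
    utilization = (compute_s + stall_s) / window_s  # fraction of the window busy
    return utilization * freq_mhz


if __name__ == "__main__":
    for f in (200, 400, 600):                       # hypothetical CPU frequencies
        compute_only = app_cost(f, compute_mcycles=50, stall_s=0.0)
        memory_bound = app_cost(f, compute_mcycles=50, stall_s=0.1)
        print(f"{f} MHz: compute-bound cost {compute_only:.1f}, "
              f"memory-bound cost {memory_bound:.1f}")
```

With a 1 s window, the compute-only cost stays at 50 at every frequency, while the memory-bound cost follows 50 + 0.1·f, i.e. 70, 90 and 110 at 200, 400 and 600 MHz.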

Next, we describe three ways to characterize memory behavior and help predict an application's CPU utilization. In each case, hardware performance data is collected by reading counters from the Performance Monitoring Unit (PMU), so Marvell's approach applies only to systems with PMU hardware support.

1. Overall data cache miss rate: Intuitively, a higher data cache miss rate means more memory traffic. To obtain the overall miss rate, we monitor the total number of accesses and misses of the level-1 (L1) data cache and, if present, the level-2 (L2) cache.

2. Main memory access rate: The occupancy of the external memory controller directly indicates memory utilization. To obtain the main memory access rate, two PMU counts must be collected: the total number of cycles during which the memory controller is busy, and the total number of cycles in the observation window.

3. Data stall rate: Pipeline stalls are caused mainly by data dependencies, where an instruction's operands are not yet available because memory is much slower than the CPU. The number of pipeline stalls therefore reflects memory traffic. It also indicates how critical the memory accesses are: not every memory access affects final performance, so it is useful to track specifically those accesses that stall the pipeline through data dependencies. With this method, we count the stall events caused by data dependencies and also record the total number of cycles, so that the data stall rate can be calculated for each window.
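The three metrics above are simple ratios of raw counter totals. The sketch below shows one way to compute them for a single observation window. The field names in `PmuWindow` are hypothetical (real PMU event names and numbers are CPU-specific), the "overall" miss rate is assumed here to mean the fraction of L1 data accesses that miss in every cache level, and both cycle counts are assumed to be in the same clock domain.

```python
from dataclasses import dataclass


@dataclass
class PmuWindow:
    """Raw PMU counts for one observation window.

    Field names are hypothetical; real PMU event names are CPU-specific.
    """
    l1d_accesses: int
    l1d_misses: int
    l2_accesses: int        # 0 when the system has no (or a disabled) L2 cache
    l2_misses: int
    mc_busy_cycles: int     # cycles the external memory controller was busy
    data_stall_cycles: int  # pipeline stall cycles caused by data dependencies
    total_cycles: int       # total cycles in the observation window


def overall_dcache_miss_rate(w: PmuWindow) -> float:
    """Method 1 (assumed definition): fraction of L1 data accesses that miss
    in every cache level and therefore go to main memory."""
    misses_to_memory = w.l2_misses if w.l2_accesses > 0 else w.l1d_misses
    return misses_to_memory / w.l1d_accesses


def main_memory_access_rate(w: PmuWindow) -> float:
    """Method 2: share of the window during which the memory controller is busy."""
    return w.mc_busy_cycles / w.total_cycles


def data_stall_rate(w: PmuWindow) -> float:
    """Method 3: share of the window spent stalled on data dependencies."""
    return w.data_stall_cycles / w.total_cycles


if __name__ == "__main__":
    # Illustrative counts only, not measured data.
    w = PmuWindow(l1d_accesses=1_000_000, l1d_misses=50_000,
                  l2_accesses=50_000, l2_misses=10_000,
                  mc_busy_cycles=2_000_000, data_stall_cycles=5_000_000,
                  total_cycles=20_000_000)
    print(overall_dcache_miss_rate(w), main_memory_access_rate(w),
          data_stall_rate(w))
```

Keeping the raw counts in one record per window makes it easy to compute any one of the metrics, or all three together, from the same sampling run.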

These methods reflect memory behavior from different angles. One method, or a combination of them, can be used at reasonable cost to analyze performance more effectively and make more accurate predictions.

Our test platform was a Marvell application processor with a two-level cache, running a Linux-based operating system and driving a QVGA LCD display. This study focuses on MP3, AAC+ and H.264 decoders.

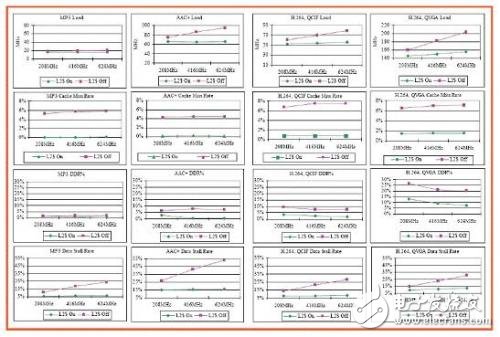

Figure 1 compares the three methods. Each graph contains two curves: one with the L2 cache enabled and one with it disabled. Three CPU frequencies were tested.

Figure 1: Three methods for characterizing memory behavior

When an application's memory access load is light, CPU utilization is approximately inversely proportional to core frequency. The application cost curve (shown as the load curve) should therefore stay flat, parallel to the core-frequency axis, as the core frequency changes. The MP3 and AAC+ decoders with the L2 cache enabled are good examples: they generate very few memory accesses, and most of those are served by the L2 cache. Without the L2 cache, the application load increases as the core frequency increases. We also found that the cache miss rate does not change significantly when the CPU frequency changes, whether the L2 cache is on or off. This means that the overall data cache miss rate is not a very effective measure of memory access behavior.
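The flat-versus-rising distinction can be turned into a simple check. The sketch below is hypothetical: it takes measured (frequency, utilization) pairs, computes the cost at each frequency, and treats the application as compute-bound if the relative spread of the cost values stays within a tolerance. The function names and the 5% default tolerance are illustrative choices, not values from the original study.

```python
def cost_curve(samples):
    """Turn (freq_mhz, utilization) samples into (freq_mhz, cost) points."""
    return [(f, u * f) for f, u in samples]


def looks_compute_bound(samples, tolerance=0.05):
    """Return True if application cost stays roughly flat across frequencies.

    `tolerance` is a hypothetical threshold on the relative spread
    (max - min) / mean of the cost values.
    """
    costs = [cost for _, cost in cost_curve(samples)]
    mean = sum(costs) / len(costs)
    spread = (max(costs) - min(costs)) / mean
    return spread <= tolerance


if __name__ == "__main__":
    # Illustrative samples: utilization halves when frequency doubles...
    flat = [(200, 0.50), (400, 0.25), (600, 1 / 6)]
    # ...versus utilization that falls more slowly than frequency rises.
    rising = [(200, 0.50), (400, 0.35), (600, 0.30)]
    print(looks_compute_bound(flat), looks_compute_bound(rising))
```

In practice the tolerance would have to absorb measurement noise, so it is best chosen from repeated runs on the target platform.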

Intuitively, the main memory access rate should carry about the same information as the cache miss rate, since cache misses directly trigger memory accesses. Our results, however, show that this is not the case. For example, the H.264 QCIF decoder shows a cache miss rate trend similar to that of the H.264 QVGA decoder, yet the H.264 QVGA decoder spends a much larger share of its execution time on memory access. This again shows that monitoring cache miss rates alone is not enough. If the overall number of cache accesses is negligible, a high cache miss rate does not necessarily degrade performance; the MP3 decoder without the L2 cache is a good example. Conversely, even a typical cache miss rate can produce a large number of memory accesses when cache accesses are frequent, as the H.264 QVGA decoder shows.
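A little arithmetic shows why the miss rate alone is misleading: the number of misses is the access count multiplied by the miss rate, so the same rate can mean very different memory traffic. The sketch below uses hypothetical numbers and assumes that every miss costs a full, non-overlapped memory latency, which makes it a rough upper-bound estimate rather than a model of any real decoder.

```python
def memory_time_fraction(accesses_per_s: float, miss_rate: float,
                         miss_latency_ns: float) -> float:
    """Rough fraction of each second spent servicing cache misses.

    Assumes every miss costs a full, non-overlapped main-memory latency,
    so this is an upper-bound style estimate, not a measurement.
    """
    misses_per_s = accesses_per_s * miss_rate
    return misses_per_s * miss_latency_ns * 1e-9  # ns -> s


# Two hypothetical workloads with the same 5% miss rate but very different
# cache access volumes (numbers are illustrative only).
light = memory_time_fraction(1e6, 0.05, 100)  # few cache accesses
heavy = memory_time_fraction(5e7, 0.05, 100)  # many cache accesses
print(f"light: {light:.3f}, heavy: {heavy:.3f}")
```

Under these assumptions the light workload spends about 0.5% of its time on memory and the heavy one about 25%, even though their miss rates are identical.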

Some memory accesses have a critical impact on performance, while others have little. None of the cache miss rate, the overall cache access count, or the main memory access rate can distinguish between the two. Fortunately, we found that the data stall rate is a very good indicator: except for the MP3 decoder, the data stall rate curve tracks the application cost for all of the applications tested. In our experiments, the data stall rate was the best predictor of application load. The MP3 decoder is the exception because its memory access frequency is extremely low: when there are very few memory accesses overall, even the few that are performance-critical have a negligible impact on performance.
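How closely a metric "tracks" application cost can be quantified. One option, swapped in here and not necessarily what the original study used, is the Pearson correlation across the tested frequencies. The sketch ranks hypothetical metric series by the absolute value of their correlation with cost; the metric names and values are illustrative only. With just three frequency points, a high correlation is weak evidence on its own.

```python
def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences.

    Returns 0.0 when either series is constant (correlation undefined).
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / (vx * vy) ** 0.5


def rank_predictors(metrics, costs):
    """Rank metric names by |correlation| with application cost, best first."""
    return sorted(metrics, key=lambda name: abs(pearson(metrics[name], costs)),
                  reverse=True)


if __name__ == "__main__":
    costs = [100, 140, 180]                    # cost at three frequencies
    metrics = {                                # hypothetical metric series
        "miss_rate": [0.125, 0.125, 0.125],    # flat across frequencies
        "mem_access_rate": [0.20, 0.21, 0.25],
        "stall_rate": [0.10, 0.20, 0.30],
    }
    print(rank_predictors(metrics, costs))
```

A flat series, such as a miss rate that does not change with frequency, gets a correlation of 0 here, matching the observation that the miss rate says little about how cost responds to frequency.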
