Lei Feng Network note: The author of this article, Jonathan, is the chief scientist at 21CT. His work focuses on using machine learning and artificial intelligence to make computers better at handling text and knowledge. He received a bachelor's degree in psychology and an MBA from Texas A&M University, and a computer science degree from the University of Texas. Translator: Zhao Xiaohua. Reviewers: Liu Diwei, Zhu Zhenggui. Editor: Zhou Jianding.

Before the advent of deep learning, the meaning contained in the text was conveyed to the computer through human-designed symbols and structures. This article discusses how deep learning uses vectors to represent semantics, how to represent vectors more flexibly, how to use vector-encoded semantics to complete translations, and where improvements need to be made.

Before the advent of deep learning, the meaning of the text we wrote was conveyed to the computer through human-designed symbols and structures. I first review these symbolic methods, including WordNet, ConceptNet, and FrameNet, so that the capabilities of deep learning can be better understood by comparison. Then I discuss how deep learning uses vectors to represent semantics and how to make those vector representations more flexible. Next I discuss how to use vector-encoded semantics to translate, and even to caption images and answer questions using text. Finally, I summarize what improvements are needed before deep learning can truly understand human language.

WordNet, developed at Princeton University, is probably the most famous symbolic resource. It groups words that are similar in meaning and represents the hierarchical relationships between the groups. For example, it holds that "car" and "automobile" refer to the same object and that both are a type of vehicle.

ConceptNet is a semantic network from MIT. It represents a broader range of relationships than WordNet. For example, ConceptNet records that "bread" is often found near "toasters." However, there are far too many such relationships to list. Ideally, we would also want to represent that a fork should not be stuck into a toaster.

FrameNet is a project at the University of California, Berkeley that attempts to catalog semantics using frames. A frame represents a concept and its associated roles. For example, a child's birthday party frame has roles for its different parts, such as the venue, the entertainment, and the sugar source. Another frame is the act of "purchasing", which includes a seller, a buyer, and the goods traded. Computers can "understand" text by searching for keywords that trigger frames. These frames have to be created manually, and their triggers also have to be linked manually. We can express a lot of knowledge in this way, but it is difficult to write it all out explicitly: there is simply too much of it to write down completely.

Symbols can also be used to create language models that calculate the probability that a word will appear next in a sentence. For example, suppose I have just written "I ate". The probability that the next words are "Qingfeng buns" can be calculated by dividing the number of occurrences of "I ate Qingfeng buns" in the corpus by the number of occurrences of "I ate". This type of model is quite useful, but we know that "Qingfeng buns" are very similar to "Goubuli buns", at least compared with "rice cookers," and the model does not take advantage of this similarity. There are also thousands of words in use, and storing all three-word phrases takes space proportional to (number of words x number of words x number of words). This is another problem with symbols: there are too many combinations of words. So we need a better way.
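The count-based estimate described above can be sketched in a few lines of Python. This is only a minimal illustration: the tiny corpus and the function names `ngram_counts` and `next_word_probability` are made up for the example and do not come from the original article.

```python
from collections import Counter


def ngram_counts(tokens, n):
    """Count every run of n consecutive tokens in the corpus."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def next_word_probability(tokens, context, word):
    """Estimate P(word | context) as count(context + word) / count(context)."""
    context = tuple(context)
    context_count = ngram_counts(tokens, len(context))[context]
    if context_count == 0:
        return 0.0  # the context never occurs, so the estimate is undefined; use 0
    phrase_count = ngram_counts(tokens, len(context) + 1)[context + (word,)]
    return phrase_count / context_count


# Hypothetical toy corpus, already split into lowercase tokens.
corpus = "i ate buns . i ate rice . i ate buns . you ate rice .".split()

p_buns = next_word_probability(corpus, ["i", "ate"], "buns")
p_dumplings = next_word_probability(corpus, ["i", "ate"], "dumplings")
```

Here "i ate" occurs three times and "i ate buns" twice, so the estimate for "buns" is 2/3. A word that never followed "i ate" in the corpus gets probability 0 no matter how similar it is to "buns", which is exactly the weakness the paragraph points out.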

Representing semantics with vectors

Deep learning uses vectors to represent semantics, so a concept is no longer represented by one monolithic symbol but by a vector of feature values. Each index of the vector represents a feature learned during neural network training, and the length of the vector is typically around 300. This is a more effective way to represent concepts because the concepts are made up of features. Two symbols can only be the same or different, whereas the similarity of two vectors can be measured. The vector for "Qingfeng buns" is very similar to the vector for "Goubuli buns," but both differ greatly from the vector for "car." As in WordNet, similar vectors can be grouped into the same class.
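One common way to measure how similar two vectors are is cosine similarity. The sketch below uses made-up 4-dimensional vectors purely for illustration; real word vectors have about 300 learned features, and these numbers are assumptions, not values from any trained model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical feature vectors, invented for this example.
vectors = {
    "qingfeng_buns": [0.9, 0.8, 0.1, 0.0],
    "goubuli_buns": [0.8, 0.9, 0.2, 0.1],
    "car": [0.0, 0.1, 0.9, 0.8],
}

sim_buns = cosine_similarity(vectors["qingfeng_buns"], vectors["goubuli_buns"])
sim_car = cosine_similarity(vectors["qingfeng_buns"], vectors["car"])
```

With these toy values the two kinds of buns score close to 1, while buns and car score close to 0. Two symbols, by contrast, could only be reported as "different".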

The vectors also have internal structure. If you subtract the vector for Rome from the vector for Italy, the result should be very close to the vector for France minus the vector for Paris. We can express this as an equation:

Italy - Rome = France - Paris

Another example is:

King - Queen = Man - Woman
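The relations above can be used to complete analogies: compute Rome - Italy + France and look for the nearest word vector. The following is a minimal sketch with invented 3-dimensional vectors; the values and the helper name `complete_analogy` are assumptions made for illustration, and trained vectors satisfy such relations only approximately.

```python
import math

# Hypothetical vectors: [is a place, is a capital, rough location feature].
vectors = {
    "Italy": [1.0, 0.0, 0.20],
    "Rome": [1.0, 1.0, 0.25],
    "France": [1.0, 0.0, 0.70],
    "Paris": [1.0, 0.95, 0.72],
    "Germany": [1.0, 0.0, -0.50],
    "Berlin": [1.0, 1.0, -0.50],
}


def complete_analogy(a, b, c, vectors):
    """Answer 'a is to b as c is to ?' with the word nearest to b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [word for word in vectors if word not in (a, b, c)]
    return min(candidates, key=lambda word: math.dist(vectors[word], target))


capital_of_france = complete_analogy("Italy", "Rome", "France", vectors)
```

The three input words are excluded from the search, since one of them is often the trivially nearest neighbour of the target vector.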

We train a neural network to predict the words that appear near each word, and this yields vectors with these properties. You can download pretrained vectors directly from Google or Stanford, or train your own with the Gensim library. It is surprising that this method works and that the resulting word vectors show such intuitive similarities and relationships, but it does work.
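To make "predict the words near each word" concrete, here is a sketch of how training examples might be built, assuming a skip-gram-style setup in which each word is paired with its neighbours inside a small window. The article does not name the exact training scheme, so the function `skipgram_pairs` and the window size are illustrative assumptions.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, nearby) pairs for every word and each neighbour within the window."""
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        end = min(len(tokens), i + window + 1)
        for j in range(start, end):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


pairs = skipgram_pairs("the woman ate tacos".split(), window=1)
```

A network trained to predict the second element of each pair from the first ends up giving similar vectors to words that occur in similar contexts.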

Composing meaning from word vectors

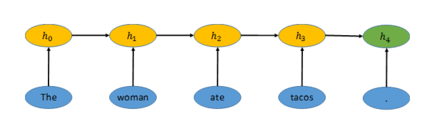

We already have vectors that represent single words. How do we use them to represent the meaning of larger units, even complete sentences? We use a technique called a recurrent neural network (RNN), as shown in the following figure. The RNN encodes the sentence "The woman ate tacos." as a vector, denoted h4. The word vector of "the" is taken as h0; the RNN then combines h0 with the word vector for "woman" to produce a new vector h1. The vector h1 is in turn combined with the word vector of the next word, "ate", to produce h2, and so on up to h4. The vector h4 represents the complete sentence.

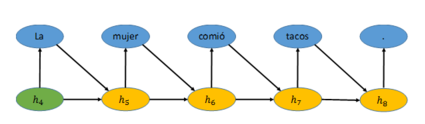
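The encoding loop can be sketched as follows. This is a simplified illustration, not the author's model: it assumes the basic update h = tanh(W·h_prev + U·x), uses random untrained weights and word vectors, and treats the final period as its own token so that the states run from h0 to h4. Practical systems use trained parameters and more complex units.

```python
import math
import random


def rnn_step(h_prev, x, W, U):
    """Combine the previous state with a word vector: h = tanh(W h_prev + U x)."""
    size = len(h_prev)
    return [
        math.tanh(
            sum(W[i][j] * h_prev[j] for j in range(size))
            + sum(U[i][j] * x[j] for j in range(size))
        )
        for i in range(size)
    ]


def encode(tokens, embeddings, W, U):
    """Return every state; h0 is the first word's vector, as in the text."""
    states = [embeddings[tokens[0]]]
    for token in tokens[1:]:
        states.append(rnn_step(states[-1], embeddings[token], W, U))
    return states


random.seed(0)
size = 4  # toy dimension; real word vectors have around 300 entries


def random_vector():
    return [random.uniform(-1, 1) for _ in range(size)]


tokens = ["the", "woman", "ate", "tacos", "."]
embeddings = {token: random_vector() for token in tokens}  # untrained placeholders
W = [random_vector() for _ in range(size)]  # state-to-state weights
U = [random_vector() for _ in range(size)]  # word-to-state weights

states = encode(tokens, embeddings, W, U)
sentence_vector = states[-1]  # h4, standing for the whole sentence
```

Because the same weights `W` and `U` are reused at every step, the network can encode sentences of any length into a vector of fixed size.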

Once the information is encoded as a vector, we can decode it into another form, as illustrated in the figure. For example, the RNN can translate (decode) the sentence represented by the vector h4 into Spanish. It first generates the most likely word given the existing vector h4. The vector h4, together with the newly generated word "La", produces the vector h5. Based on h5, the RNN generates the next most likely word, "mujer". This process repeats until a period is generated, at which point the network stops.
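The generate-one-word-then-update loop can be sketched as greedy decoding. In this illustration the trained decoder is replaced by a hand-written score table, `TOY_SCORES`, which is entirely hypothetical, and the "state" is simply the tuple of words produced so far rather than a vector such as h5. Only the control flow is meant to mirror the description.

```python
# Hypothetical stand-in for a trained decoder: maps the words generated so far
# to scores for the next word. A real decoder computes these from state vectors.
TOY_SCORES = {
    (): {"La": 0.9, "El": 0.1},
    ("La",): {"mujer": 0.8, "casa": 0.2},
    ("La", "mujer"): {"comió": 0.7, "come": 0.3},
    ("La", "mujer", "comió"): {"tacos": 0.9, "pan": 0.1},
    ("La", "mujer", "comió", "tacos"): {".": 1.0},
}


def greedy_decode(next_word_scores, max_len=10):
    """Repeatedly emit the highest-scoring next word until a period appears."""
    words = []
    while len(words) < max_len:
        scores = next_word_scores(tuple(words))
        word = max(scores, key=scores.get)  # most likely next word
        words.append(word)
        if word == ".":  # the period ends the sentence
            break
    return words


translation = greedy_decode(lambda state: TOY_SCORES[state])
```

Picking the single best word at each step is the simplest strategy; it matches the description above, although it does not guarantee the most likely sentence overall.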

Using this encoder-decoder model for translation requires a large corpus of text in both the source and target languages on which to train the RNN. These RNNs usually contain very complex internal nodes, and the entire model often has millions of parameters to learn.

We can output the decoded result in any form, such as a parse tree or a description of an image, provided there are enough images with descriptions to train on. To add descriptions to pictures, you first train a neural network on images so that it can identify the objects in them. The values near the output layer of that network are then taken as the vector representing the image, and a decoder turns this vector into a description of the image.

From compositional semantics to attention, memory, and question answering

The encoder-decoder method can look like little more than a trick, so let us look step by step at how it applies in practice. We can think of decoding as answering the question, “How do you translate this sentence?” Or, given a sentence to be translated and the part of the translation already written, “What should you do next?”

To answer these questions, the algorithm first needs to remember some state. In the example above, the system remembers only the current state vector h and the last word written. What if we want it to use everything it has seen before? In machine translation, this means that when choosing the next word it must be able to look back at the earlier state vectors h0, h1, h2, and h3. Network structures have been built to meet this need: the neural network learns to decide, at each decision point, which remembered state is most relevant. We can think of this as attention focused on memory.

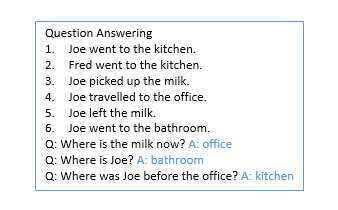
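One simple way to decide which remembered state is most relevant is to score each state against the current one and normalize the scores into weights. The sketch below assumes dot-product scoring followed by a softmax, which is one common choice and is not specified in the article; the vectors are made up, and in a real network the scoring function is learned.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def attend(query, memory):
    """Weight each remembered state by its dot product with the query."""
    scores = [sum(q * m for q, m in zip(query, state)) for state in memory]
    weights = softmax(scores)
    context = [
        sum(weight * state[i] for weight, state in zip(weights, memory))
        for i in range(len(query))
    ]
    return weights, context


# Hypothetical earlier states h0..h3 and a current decoder state.
memory = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
    [0.5, 0.5, 0.0],
]
query = [0.0, 3.0, 0.0]

weights, context = attend(query, memory)
most_relevant = weights.index(max(weights))
```

The weighted sum `context` is what the decoder would consult when choosing its next word, so the states judged most relevant contribute the most.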

This matters because, once concepts and sentences can be encoded as vectors and a large number of vectors can be kept as memory, the best answer to a question can be found by searching that memory. Deep learning can therefore use text to answer questions. In the simplest case, we take the inner product of the vector representing the question with each vector in memory, and the best match is the best answer to the question. Another approach is to encode the question and the facts with a multi-layer neural network and pass the output of the final layer to a function whose output is the answer. These methods are trained on simulated question-and-answer data and can then answer questions of the kind described by Weston.
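The simplest case, matching a question against memory by inner product, can be sketched as below. To keep the example self-contained, sentences are encoded as bag-of-words count vectors, which is an assumption standing in for learned sentence vectors; the facts, the question, and the function names are invented for illustration.

```python
def bag_of_words(sentence, vocabulary):
    """Encode a sentence as word counts over a fixed vocabulary."""
    words = sentence.lower().split()
    return [words.count(term) for term in vocabulary]


def best_memory(question, facts):
    """Return the stored fact whose vector has the largest inner product with the question."""
    vocabulary = sorted({word for text in facts + [question] for word in text.lower().split()})
    question_vector = bag_of_words(question, vocabulary)
    scores = [
        sum(q * f for q, f in zip(question_vector, bag_of_words(fact, vocabulary)))
        for fact in facts
    ]
    return facts[scores.index(max(scores))]


# Hypothetical memory of facts.
facts = [
    "mary went to the kitchen",
    "john picked up the ball",
    "a box fell off the table",
]

answer = best_memory("where is the ball", facts)
```

With count vectors the inner product just measures word overlap. With learned vectors the same search can match a question to a fact that shares its meaning even when the wording differs.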

The methods just discussed learn to answer questions from stories, but some important parts of a story are so obvious that we never write them down. Imagine a book on a table. How does a computer know that moving the table also moves the book? In the same way, how does a computer know that it only rains outdoors? Or, as Marvin Minsky asked, how does a computer know that you can pull a box with a rope but cannot push it? Because we do not write all of these facts down, stories are limited in the knowledge they can convey to our algorithms. To gain this knowledge, our robots will have to learn through real or simulated experience.

Robots must go through this real-world experience and encode it in a deep neural network, on which general semantics can then be built. If a robot repeatedly sees a box fall off a table, it will form a neural circuit for this event. When its mother says, "Oh no, the box fell," this circuit becomes associated with the word "fell." Later, as a mature robot, when it encounters the sentence "the stock fell 10 points", it can rely on this neural circuit to understand what the sentence means.

Robots also need to combine general real-life experience with abstract reasoning. Consider the sentence "He went to the junkyard." WordNet can only provide a set of words related to "went". ConceptNet can associate "went" with "go" but never captures what "go" really means. FrameNet has a self-motion frame, which comes very close but is still not enough. Deep learning can encode the sentence as a vector and then answer various questions, such as answering "Where is he?" with "the junkyard." However, none of these methods conveys what it means for a person to be in a different place, namely that he is no longer here and is nowhere else either. We need an interface that connects natural language with logic, or a way to encode abstract logic with neural networks.

Practice: introductory resources for deep learning

There are many ways to get started. Stanford has an open course on deep learning for NLP. You can also take Professor Hinton's course on Coursera. In addition, Prof. Bengio and his colleagues have written an easy-to-understand online textbook that explains deep learning. When you are ready to start programming, you can use Theano if you work in Python, or Deeplearning4j if you are more comfortable with Java.

Summary

Improvements in computer performance and the increasing digitization of our lives have driven the deep learning revolution. Deep learning models succeed because they are large enough, often with millions of parameters. Training these models requires sufficient training data and a great deal of computation. To achieve true intelligence, we need to go deeper still. Deep learning algorithms must learn from real-world experience, conceptualize that experience, and then combine it with abstract reasoning.

Lei Feng Network note: This article is published on Lei Feng Network with authorization from CSDN. For permission to reprint, please contact the original author.