1. Background

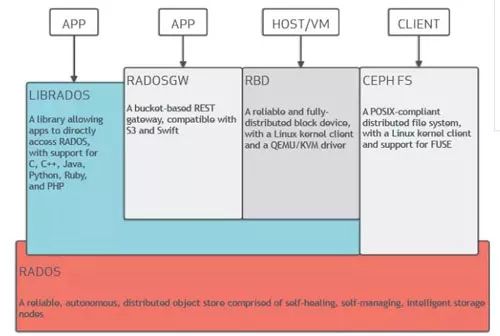

Riding the growth of cloud computing, Ceph has become one of the most popular open source software-defined storage projects. As the figure shows, it exposes three storage interfaces on top of the same underlying platform: file storage, object storage, and block storage. This article focuses on object storage, that is, radosgw (RGW).

With Ceph, a private storage platform that is secure, highly available, and scalable can be built quickly and easily. Private storage platforms are receiving more and more attention for their security advantages, but they also have drawbacks. Consider a multinational company that needs to access data held in its home data center from abroad. How can this remote data access requirement be supported? Within a purely private environment there are two solutions:

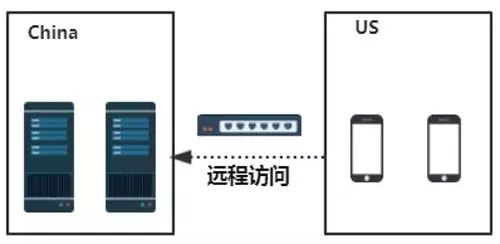

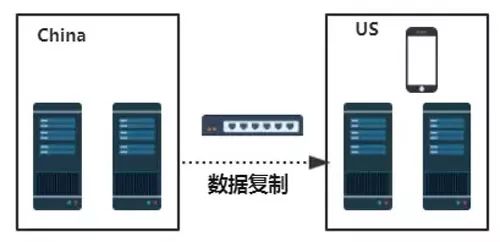

Access the data in the local data center directly across regions. This solution inevitably leads to higher access latency.

Build a data center abroad and continuously replicate local data to it asynchronously. The disadvantage of this solution is its high cost.

In this scenario, a purely private cloud storage platform does not solve the problem well, whereas a hybrid cloud solution meets the need better. For the long-distance data access scenario described above, the public cloud can serve as the storage node in the remote region: data is replicated asynchronously from the local data center to the public cloud, and remote clients then access it directly in the public cloud. This approach has clear advantages in overall cost and speed, and suits such long-distance data access requirements.

2. Development Status: RGW Cloud Sync Development History

A hybrid cloud mechanism based on Ceph object storage is a good complement to the Ceph ecosystem. For this reason, the community is releasing the RGW Cloud Sync feature in the Mimic version. It initially supports exporting data from RGW to public cloud object storage platforms that support the S3 protocol, such as Tencent Cloud COS, which we used in our tests. Like the other plug-ins in Multisite, RGW Cloud Sync is implemented as a new synchronization plug-in (currently called the aws sync module) that is compatible with the S3 protocol. The overall development history of the feature is as follows:

SUSE contributed the initial version, which supported only simple uploads;

Red Hat then implemented full semantics on top of this initial version, such as multipart uploads and deletes. To avoid excessive memory use when synchronizing large files, it also implemented streaming uploads.

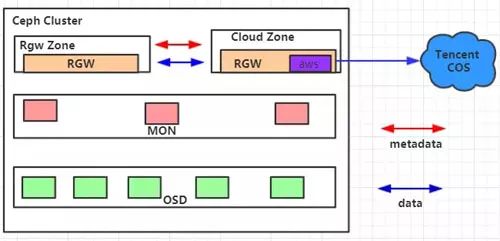

We ran real-world tests of the public cloud synchronization feature that will be released in the Ceph community's Mimic version, and gave feedback and contributed development based on the problems we found. For the tests, we set up the following operating environment:

The Cloud Zone contains the public cloud synchronization plug-in and is configured as a read-only zone. It synchronizes data written in the RGW Zone to the Tencent Cloud public object storage platform (COS). In our tests, data was successfully backed up from RGW to the public cloud platform, and the destination cloud path could be freely customized. We also improved the synchronization status display so that errors occurring during synchronization, the data currently lagging behind, and similar information can be detected quickly.

3. The core mechanism

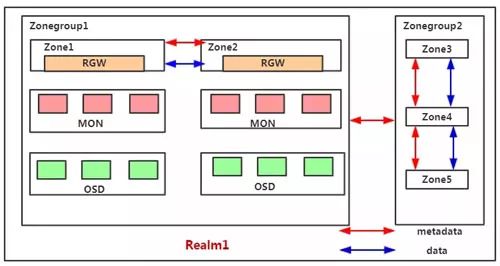

Multisite

RGW Cloud Sync is essentially a new asynchronous sync module built on top of Multisite, so we first look at some of Multisite's core mechanisms. Multisite is RGW's solution for remote data backup. In essence, it is a log-based asynchronous replication strategy. The figure shows a schematic of a Multisite deployment.

There are the following basic concepts in Multisite:

Zone: exists in a single Ceph cluster, is served by a group of RGW services, and corresponds to a set of backend pools;

Zonegroup: contains at least one zone; data and metadata are synchronized between the zones of a zonegroup;

Realm: a stand-alone namespace containing at least one zonegroup; metadata is synchronized between the zonegroups of a realm.

Let's look at three of the working mechanisms in Multisite: Data Sync, Async Framework, and Sync Plugin. The Data Sync section analyzes the data synchronization process in Multisite. The Async Framework section introduces the coroutine framework used in Multisite. The Sync Plugin section introduces some of the synchronization plug-ins in Multisite.
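The containment rules in these three definitions can be written down as a small data model. The following is only an illustrative sketch: the class names, fields, and the `data_sync_peers` helper are hypothetical and do not correspond to Ceph's actual data structures.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Zone:
    name: str
    cluster: str                                     # Ceph cluster hosting this zone
    pools: List[str] = field(default_factory=list)   # backend pools (illustrative)


@dataclass
class ZoneGroup:
    name: str
    zones: List[Zone]

    def __post_init__(self) -> None:
        if not self.zones:
            raise ValueError("a zonegroup needs at least one zone")


@dataclass
class Realm:
    name: str
    zonegroups: List[ZoneGroup]

    def __post_init__(self) -> None:
        if not self.zonegroups:
            raise ValueError("a realm needs at least one zonegroup")

    def data_sync_peers(self, zone_name: str) -> List[str]:
        """Zones that exchange object data with zone_name (same zonegroup)."""
        for group in self.zonegroups:
            names = [z.name for z in group.zones]
            if zone_name in names:
                return [n for n in names if n != zone_name]
        raise KeyError(zone_name)
```

In this model, object data flows only between zones of the same zonegroup, while zonegroups within a realm share only metadata.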

Data Sync

Data Sync backs up data within a zonegroup: data written in one zone is eventually synchronized to all zones in the zonegroup. A complete Data Sync process consists of the following three steps:

Init: establishes the log shard mapping between the remote source zone and the local zone, that is, the remote datalog shards are mapped to the local zone. The datalog is later used to tell whether there is data to be updated.

Build Full Sync Map: fetches the metadata of the remote buckets and builds a mapping that records the synchronization status of each bucket. If the source zone holds no data when Multisite is configured, this step is skipped.

Data Sync: starts synchronizing object data. The datalog of the source zone is fetched through the RGW API, and the corresponding bilog is consumed to synchronize the data.

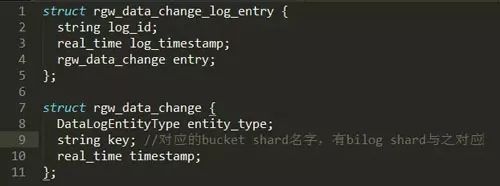

The following uses incremental synchronization of the data in one bucket to illustrate how Data Sync works. As readers familiar with RGW will know, a bucket instance contains at least one bucket shard, and Data Sync operates at bucket shard granularity. Each bucket shard has a corresponding datalog shard and bilog shard.

After the correspondence has been established and full synchronization has completed, the local zone records a sync_marker for each datalog shard of the source zone. The local zone then periodically compares this sync_marker with the max_marker of the remote datalog. If some data has not yet been synchronized, the datalog entries are consumed through the RGW API. Each datalog entry records the bucket shard that changed, and the bilog of that bucket shard is then consumed to synchronize the data. As the figure shows, the remote datalog is stored in the rgw_data_change_log_entry format. Each datalog entry contains an rgw_data_change field, and the key field of rgw_data_change is the name of the bucket shard. With this name the corresponding bilog shard can be found, and its bilog is consumed for incremental synchronization. Full synchronization is essentially the same process without a starting sync_marker: the datalog is consumed from the very beginning.
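The marker comparison described above can be sketched in a few lines. This is a simplified model, not RGW code: markers are plain integers here, and `DatalogEntry`, `incremental_sync`, and the `sync_bucket_shard` callback are hypothetical names standing in for the datalog entry, the sync loop, and the bilog replay.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class DatalogEntry:
    marker: int          # position of the entry in the datalog shard (simplified)
    bucket_shard: str    # key naming the bucket shard that changed


def incremental_sync(
    datalog: List[DatalogEntry],
    sync_marker: int,
    sync_bucket_shard: Callable[[str], None],
) -> int:
    """Consume datalog entries newer than sync_marker and return the new marker."""
    max_marker = max((e.marker for e in datalog), default=sync_marker)
    if sync_marker >= max_marker:
        return sync_marker                     # nothing new on the source zone
    for entry in sorted(datalog, key=lambda e: e.marker):
        if entry.marker <= sync_marker:
            continue                           # already synchronized
        sync_bucket_shard(entry.bucket_shard)  # stands in for replaying the bilog
        sync_marker = entry.marker
    return sync_marker
```

Calling `incremental_sync` with `sync_marker=0` models the full-sync case, in which every entry is consumed from the start of the log.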

Async Framework

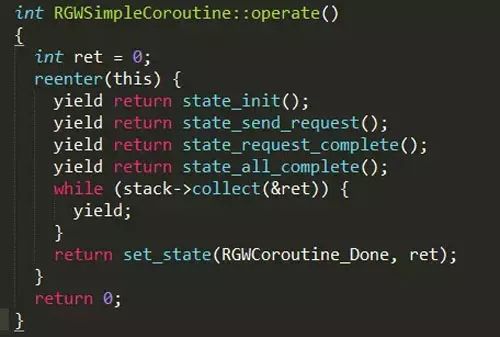

The asynchronous execution framework used in RGW is built on boost::asio::coroutine, a stackless coroutine library. Unlike common coroutine implementations, the Async Framework does not use ucontext to save the current stack in order to support coroutines. Instead, it uses macros to achieve a similar effect: coroutines are implemented through a few pseudo-keywords (macros) such as reenter, yield, and fork. RGWCoroutine is the abstract coroutine class defined in RGW and is a subclass of boost::asio::coroutine. It describes a task flow and contains an implicit state machine that concrete subclasses implement. An RGWCoroutine can call other RGWCoroutines or spawn a parallel RGWCoroutine.

The RGWCoroutine class holds an RGWCoroutinesStack member. When one RGWCoroutine invokes another with call, the callee's task flow is pushed onto the same stack, and control does not return to the caller until the called task flows have completed. Spawning a new RGWCoroutine, by contrast, creates a new task stack to hold the task flow and does not block the current one. When a coroutine needs to perform an asynchronous IO operation, it marks itself as blocked and the IO event is registered with the task manager. Once the IO completes, the task manager unblocks the stack and control returns to the coroutine. The figure shows a simple coroutine usage example that implements a request processor with a fixed period.
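The difference between call and spawn can be illustrated with a toy scheduler. The sketch below uses Python generators rather than boost::asio::coroutine, and `Call`, `Spawn`, `run`, `child`, and `parent` are hypothetical names. It models only the stack behaviour described above, not IO blocking or the real RGWCoroutine API.

```python
from collections import deque
from typing import Deque, Generator, List


class Call:
    """Request: run a coroutine on the caller's stack; the caller waits."""
    def __init__(self, coro: Generator) -> None:
        self.coro = coro


class Spawn:
    """Request: run a coroutine on a new stack; the caller keeps going."""
    def __init__(self, coro: Generator) -> None:
        self.coro = coro


def run(root: Generator) -> None:
    """Round-robin over stacks; each stack is a list of nested coroutines."""
    stacks: Deque[List[Generator]] = deque([[root]])
    while stacks:
        stack = stacks.popleft()
        try:
            request = next(stack[-1])        # resume the innermost coroutine
        except StopIteration:
            stack.pop()                      # callee done: caller is on top again
            if stack:
                stacks.append(stack)
            continue
        if isinstance(request, Call):
            stack.append(request.coro)       # same stack, so the caller is blocked
        elif isinstance(request, Spawn):
            stacks.append([request.coro])    # separate stack, runs in parallel
        stacks.append(stack)


def child(name: str, log: List[str]) -> Generator:
    log.append(f"{name} start")
    yield                                    # give other stacks a turn
    log.append(f"{name} end")


def parent(log: List[str]) -> Generator:
    yield Spawn(child("spawned", log))
    log.append("parent continues")           # runs before the spawned child ends
    yield Call(child("called", log))
    log.append("parent resumes")             # runs only after the called child ends
```

Running `run(parent(log))` on an empty list shows the two behaviours side by side: the parent continues while the spawned child is still in progress, but resumes after a call only once the called child has finished.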

Sync Plugin

The data synchronization process described in the previous section synchronizes data from one Ceph zone to another. This process can be abstracted to make data synchronization more generic, so that different sync modules can be added to migrate data to different destinations. The upper-layer logic for consuming the datalog is always the same; only the last step, delivering the data to the destination, differs. To implement a different synchronization plug-in, only the interfaces for synchronizing and deleting data need to be implemented. In RGW, each such plug-in is called a sync module. Currently, there are four sync modules in Ceph:

rgw: the default sync module, which synchronizes data between Ceph zones

log: fetches an object's extended attributes and prints them

elasticsearch: synchronizes object metadata to Elasticsearch (ES) to support search requests

aws: released with the Mimic version; exports data from RGW to an object storage platform that supports the S3 protocol
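The plug-in idea, a shared log-consuming layer with a destination-specific last step, can be sketched as an abstract interface with two operations. The names below (`SyncModule`, `sync_object`, `remove_object`, `InMemoryTarget`, `replay`) are hypothetical and only loosely mirror the sync and delete interfaces mentioned above; they are not RGW's actual C++ interface.

```python
from abc import ABC, abstractmethod
from typing import Dict, Iterable, Tuple


class SyncModule(ABC):
    """Destination-specific part of synchronization (hypothetical interface)."""

    @abstractmethod
    def sync_object(self, bucket: str, key: str, data: bytes) -> None:
        """Deliver a created or updated object to the destination."""

    @abstractmethod
    def remove_object(self, bucket: str, key: str) -> None:
        """Propagate a deletion to the destination."""


class InMemoryTarget(SyncModule):
    """Toy destination that stores objects in a dict."""

    def __init__(self) -> None:
        self.objects: Dict[Tuple[str, str], bytes] = {}

    def sync_object(self, bucket: str, key: str, data: bytes) -> None:
        self.objects[(bucket, key)] = data

    def remove_object(self, bucket: str, key: str) -> None:
        self.objects.pop((bucket, key), None)


def replay(entries: Iterable[Tuple[str, str, str, bytes]], module: SyncModule) -> None:
    """Shared upper layer: walk the log and hand each change to the module."""
    for op, bucket, key, data in entries:
        if op == "put":
            module.sync_object(bucket, key, data)
        elif op == "delete":
            module.remove_object(bucket, key)
        else:
            raise ValueError(f"unknown operation: {op}")
```

Supporting a new destination then means writing another `SyncModule` subclass; `replay` stays unchanged.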

4. RGW Cloud Sync

Streaming process

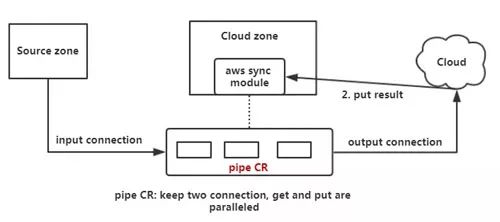

As mentioned earlier, SUSE contributed the initial version of RGW Cloud Sync. As the figure shows, a synchronization in that version logically consists of three steps: the aws sync module loads the remote object into memory over an HTTPS connection, puts the object to the cloud, and the cloud returns the result of the put.

For a small object this process works well, but loading a relatively large object entirely into memory is a problem, so Red Hat added a streaming process on top of it. Essentially, a new coroutine, here called the pipe CR, uses a pipeline-like mechanism and holds two HTTP connections at the same time, one for pulling the remote object and one for uploading it. The two run in parallel, which effectively prevents memory from blowing up, as the figure shows.
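The memory-bounding effect of such a pipe can be shown with a bounded buffer between a reader and an uploader. The sketch uses a thread and `queue.Queue` in place of RGW's coroutines, which is a different technique chosen for brevity. `pipe_copy`, `upload_chunk`, and the default sizes are assumptions for illustration.

```python
import queue
import threading
from typing import BinaryIO, Callable


def pipe_copy(
    source: BinaryIO,
    upload_chunk: Callable[[bytes], None],
    chunk_size: int = 64 * 1024,
    max_buffered: int = 4,
) -> int:
    """Stream source to upload_chunk while buffering at most max_buffered chunks."""
    buf: "queue.Queue[bytes]" = queue.Queue(maxsize=max_buffered)

    def producer() -> None:
        # Pull side: read chunks and block whenever the buffer is full.
        while True:
            chunk = source.read(chunk_size)
            buf.put(chunk)          # an empty chunk doubles as the end marker
            if not chunk:
                return

    reader = threading.Thread(target=producer, daemon=True)
    reader.start()

    total = 0
    while True:                     # push side: upload chunks as they arrive
        chunk = buf.get()
        if not chunk:
            break
        upload_chunk(chunk)
        total += len(chunk)
    reader.join()
    return total
```

However large the object is, the queue holds at most `max_buffered` chunks at a time, which is the property that keeps the streaming process from exhausting memory.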

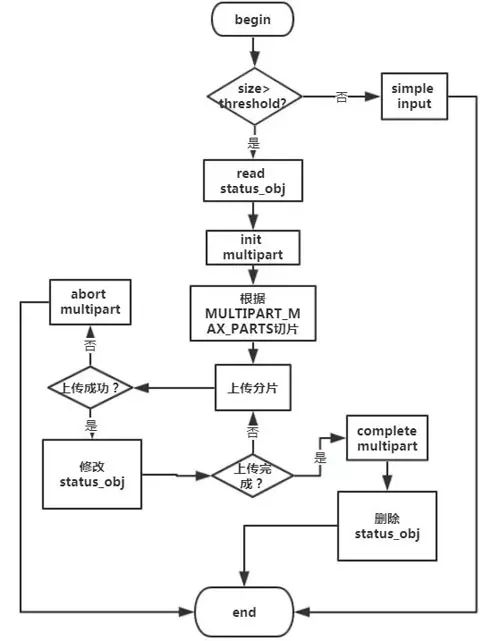

Multipart upload

Multipart upload builds on the streaming process to support uploading large files. Its overall process is as follows:
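As a rough illustration of the generic S3 multipart pattern (initiate the upload, upload numbered parts, complete the upload), the sketch below computes only the part layout for an object. `plan_parts` is a hypothetical helper. The 5 MiB minimum part size and the 10,000-part limit are the published Amazon S3 limits, and this article does not state whether the aws sync module uses the same values.

```python
from typing import List, Tuple

MIN_PART = 5 * 1024 * 1024   # S3 minimum size for every part except the last
MAX_PARTS = 10_000           # S3 maximum number of parts per upload


def plan_parts(object_size: int, part_size: int = MIN_PART) -> List[Tuple[int, int, int]]:
    """Return (part_number, offset, length) tuples that cover the object."""
    if object_size <= 0:
        raise ValueError("object_size must be positive")
    if part_size < MIN_PART:
        raise ValueError("part_size is below the S3 minimum")
    # Grow the part size until the object fits within the part-count limit.
    while -(-object_size // part_size) > MAX_PARTS:
        part_size *= 2
    parts = []
    offset = 0
    number = 1                   # S3 part numbers start at 1
    while offset < object_size:
        length = min(part_size, object_size - offset)
        parts.append((number, offset, length))
        offset += length
        number += 1
    return parts
```

Each tuple identifies one byte range that could be streamed as a separate part, so only one part's worth of data needs to be in flight at a time.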

JSON config

The public cloud storage environment is relatively complex, and using the aws sync module requires a more elaborate configuration, so Red Hat added JSON config support to this plug-in.

Compared with the other plug-ins, there are three main configuration items: host_style, acl_mapping, and target_path. host_style sets the format of the domain name, acl_mapping sets how ACL information is synchronized, and target_path sets the storage location at the destination. The figure shows an actual configuration for an aws zone: the domain name style is path-style, and target_path is rgw, plus the name of the zonegroup the zone belongs to, plus the user_id, followed by the bucket name. The final path of an object in the cloud is target_path plus the object name.
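How a target_path of this shape resolves to a final object path can be sketched with simple template substitution. The placeholder names (`${zonegroup}`, `${user_id}`, `${bucket}`), the separators, and the `object_destination` helper are assumptions made for illustration. The exact variable syntax of the aws sync module is not given in this article, and acl_mapping is left out.

```python
from string import Template

# Hypothetical configuration mirroring the example described above.
config = {
    "host_style": "path",
    "target_path": "rgw-${zonegroup}-${user_id}/${bucket}",
}


def object_destination(target_path: str, obj_name: str, **context: str) -> str:
    """Expand target_path placeholders, then append the object name."""
    prefix = Template(target_path).substitute(context)
    return f"{prefix}/{obj_name}"


destination = object_destination(
    config["target_path"],
    "photo.jpg",
    zonegroup="default",
    user_id="alice",
    bucket="images",
)
# destination == "rgw-default-alice/images/photo.jpg"
```

`Template.substitute` raises `KeyError` when a placeholder has no value, which surfaces a misconfigured target_path early.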

5. Follow-up Work

The follow-up work on RGW Cloud Sync mainly includes the following four items:

Improving the synchronization status display, for example showing the datalogs, buckets, and objects that are lagging behind, and reporting errors that occur during synchronization to the Monitor;

Reverse synchronization of data, that is, supporting synchronization of public cloud data into RGW;

Support for importing RGW data into more public cloud platforms, rather than only platforms that support the S3 protocol;

Building on the above, using RGW as a bridge to synchronize data between different cloud platforms.

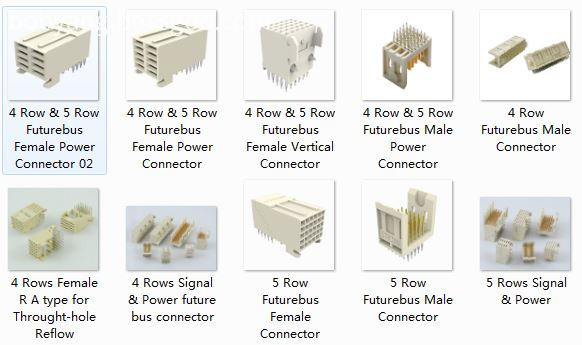
